Wk 5 - Hypothesis & Power

PSYC 640 - Fall 2023

Lessons from Lab 1

Getting data into R is surprisingly hard

The console doesn’t come with you

Work together

Professor gets too excited about R

Sampling Revisited

We use features of the sample (statistics) to inform us about features of the population (parameters). The quality of this information goes up as sample size goes up – the Law of Large Numbers. The quality of this information is easier to defend with random samples.

All sample statistics are wrong (they do not match the population parameters exactly) but they become more useful (better matches) as sample size increases.

Some Terminology

| Population | Sample |

|---|---|

| \(\mu\) (mu) = Population Mean | \(\bar{X}\) (x bar) = Sample Mean |

| \(\sigma\) (sigma) = Population Standard Deviation | \(s\) = \(\hat{\sigma}\) = Sample Standard Deviation |

| \(\sigma^2\) (sigma squared) = Population Variance | \(s^2\) = \(\hat{\sigma^2}\) = Sample Variance |

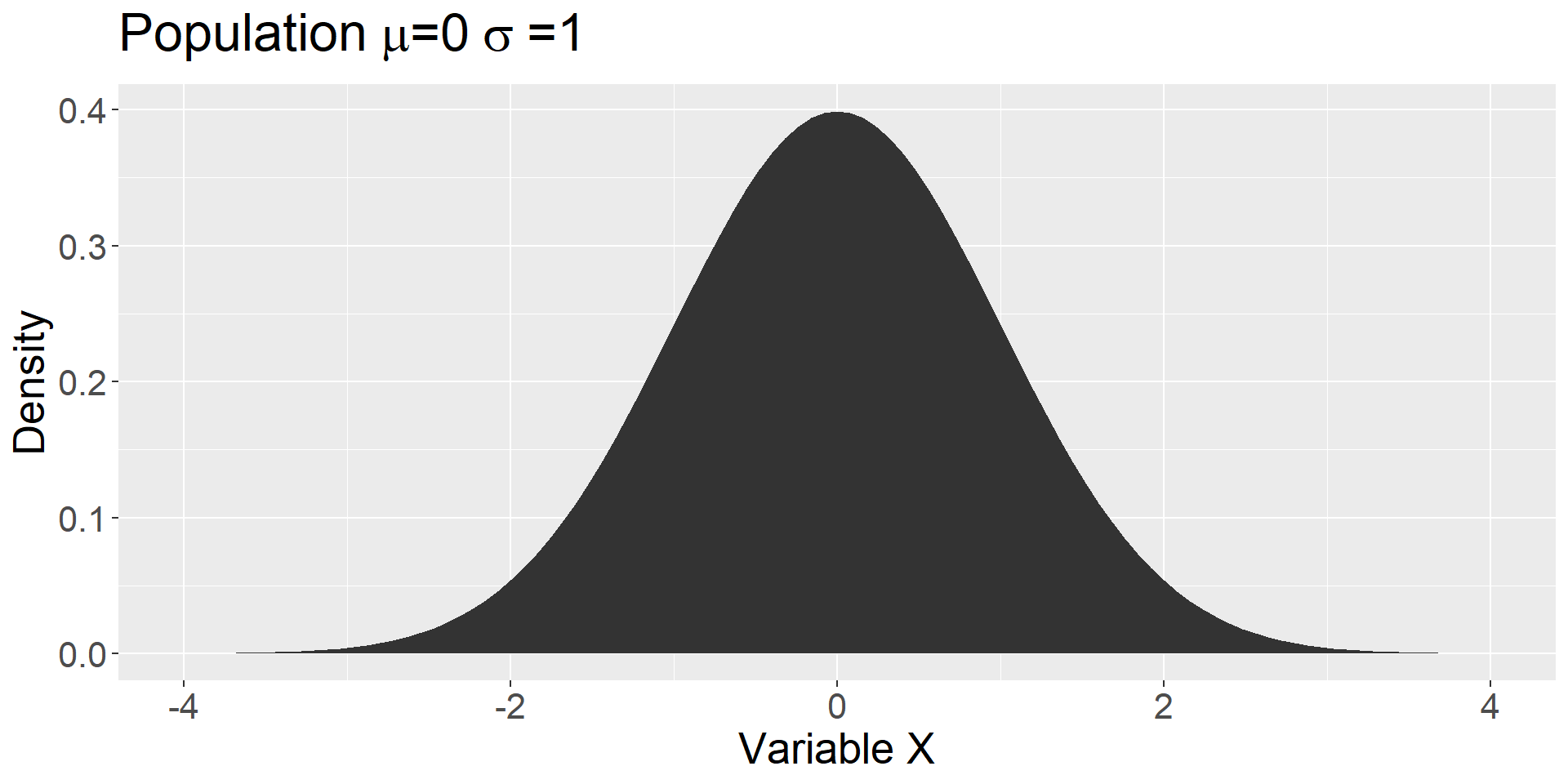

Population distribution

The parameters of this distribution are unknown. We use the sample to inform us about the likely characteristics of the population.

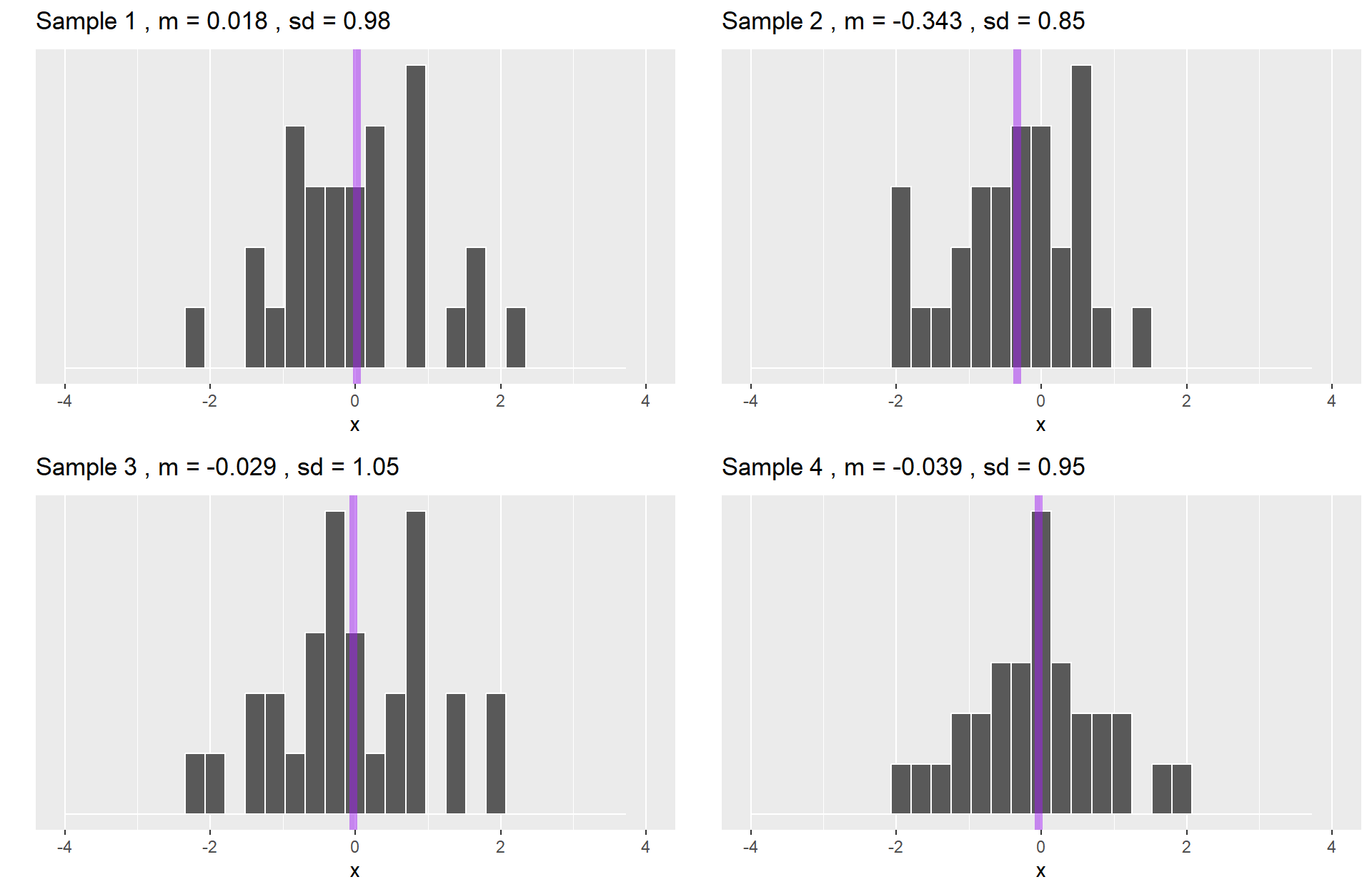

Samples from the population.

Each sample distribution will resemble the population. That resemblance will be better as sample size increases: The Law of Large Numbers.

Statistics (e.g., mean) can be calculated for any sample.

Code

library(ggpubr) #for multiple plots

sample_size = 30

set.seed(101919)

for(i in 1:4){

sample = rnorm(n = sample_size)

m = round(mean(sample),3)

s = round(sd(sample),2)

p = data.frame(x = sample) %>%

ggplot(aes(x = x)) +

geom_histogram(color = "white") +

geom_vline(aes(xintercept = mean(x)),

color = "purple", size = 2, alpha = .5)+

scale_x_continuous(limits = c(-4,4)) +

scale_y_continuous("", breaks = NULL) +

labs(title = as.expression(bquote("Sample"~.(i)~", m ="~.(m)~", sd ="~.(s))))

assign(paste0("p",i), p) +

theme(text = element_text(size = 20))

}

ggarrange(p1,p2,p3,p4, ncol =2, nrow = 2)

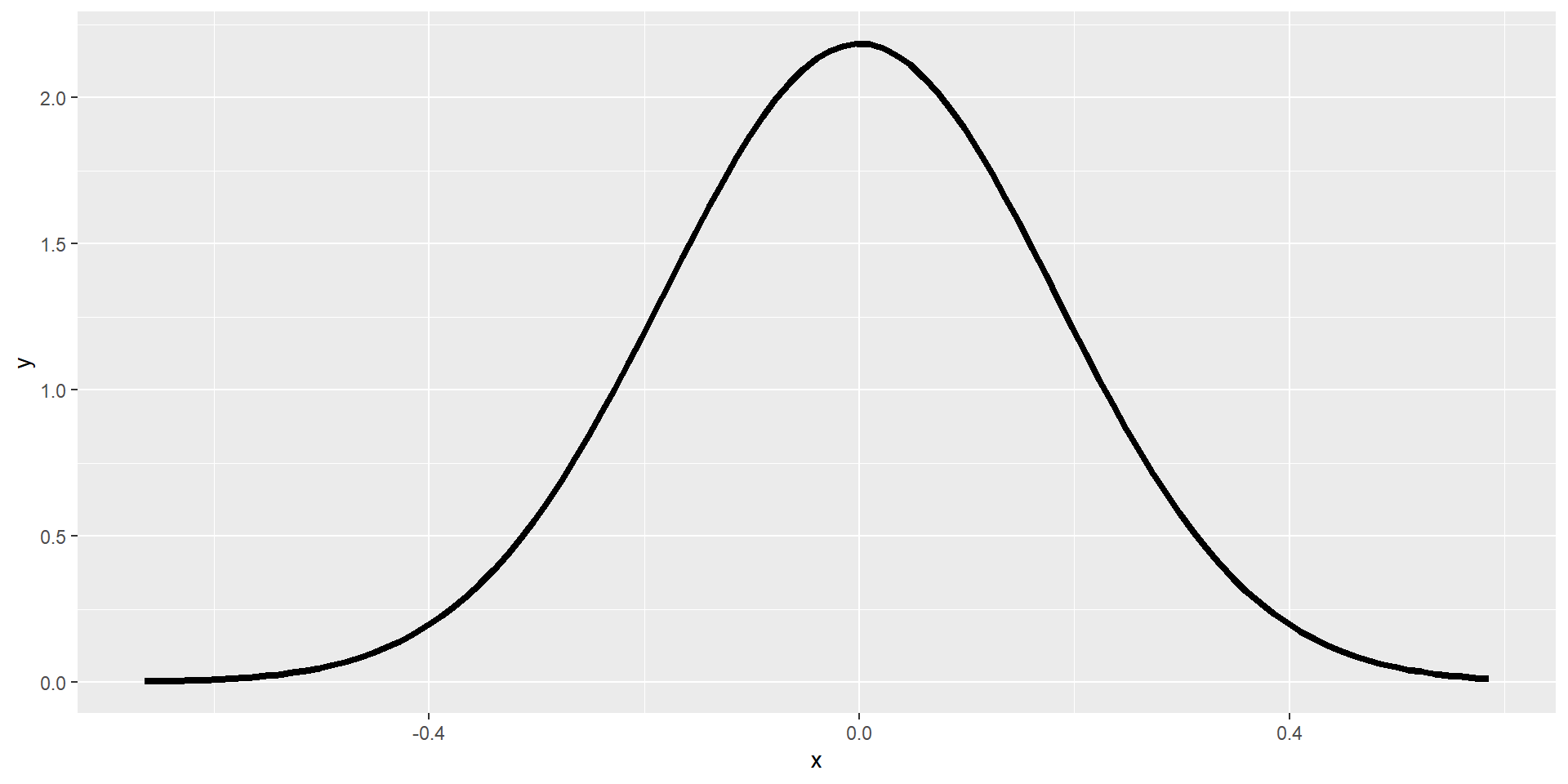

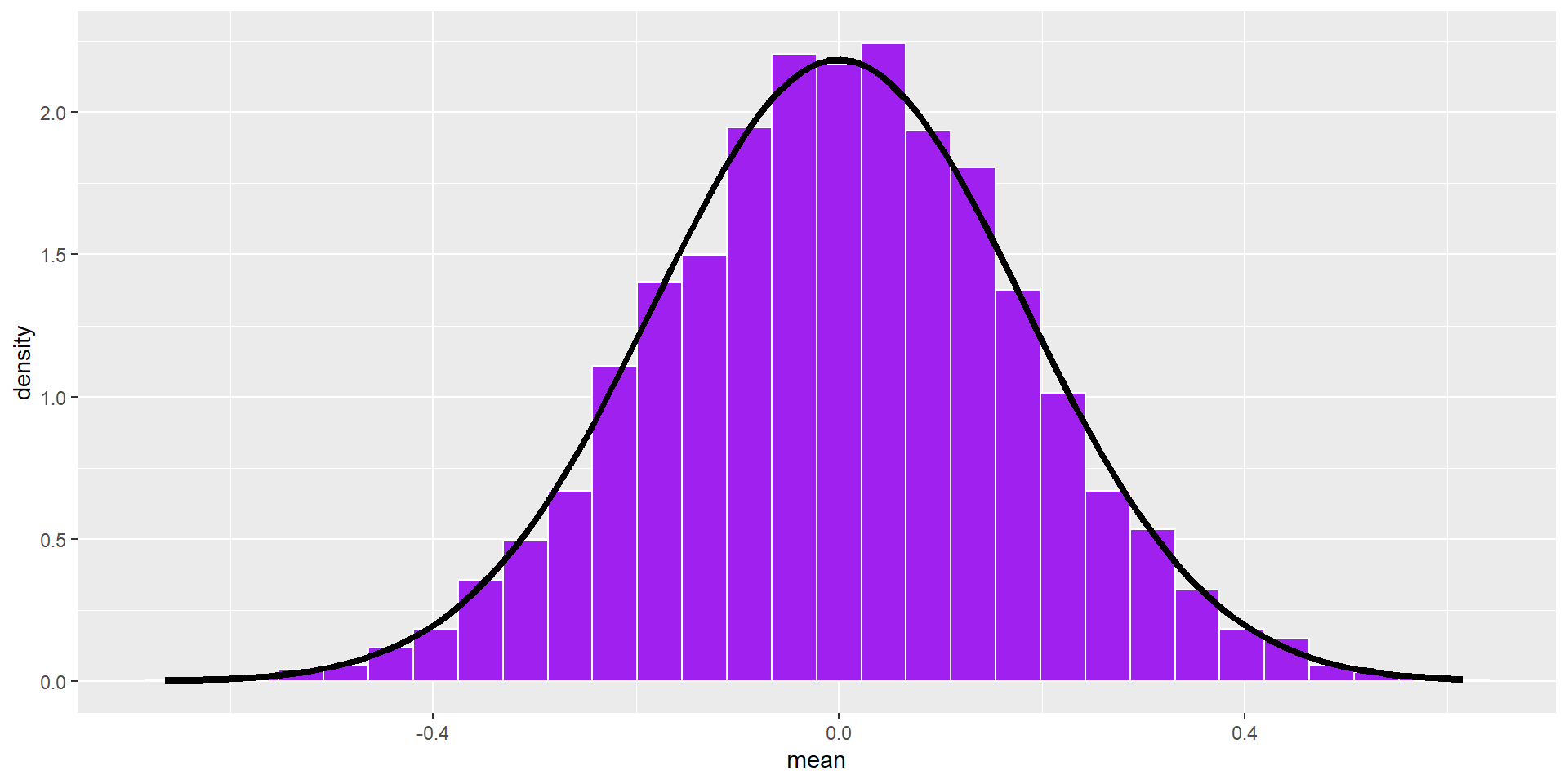

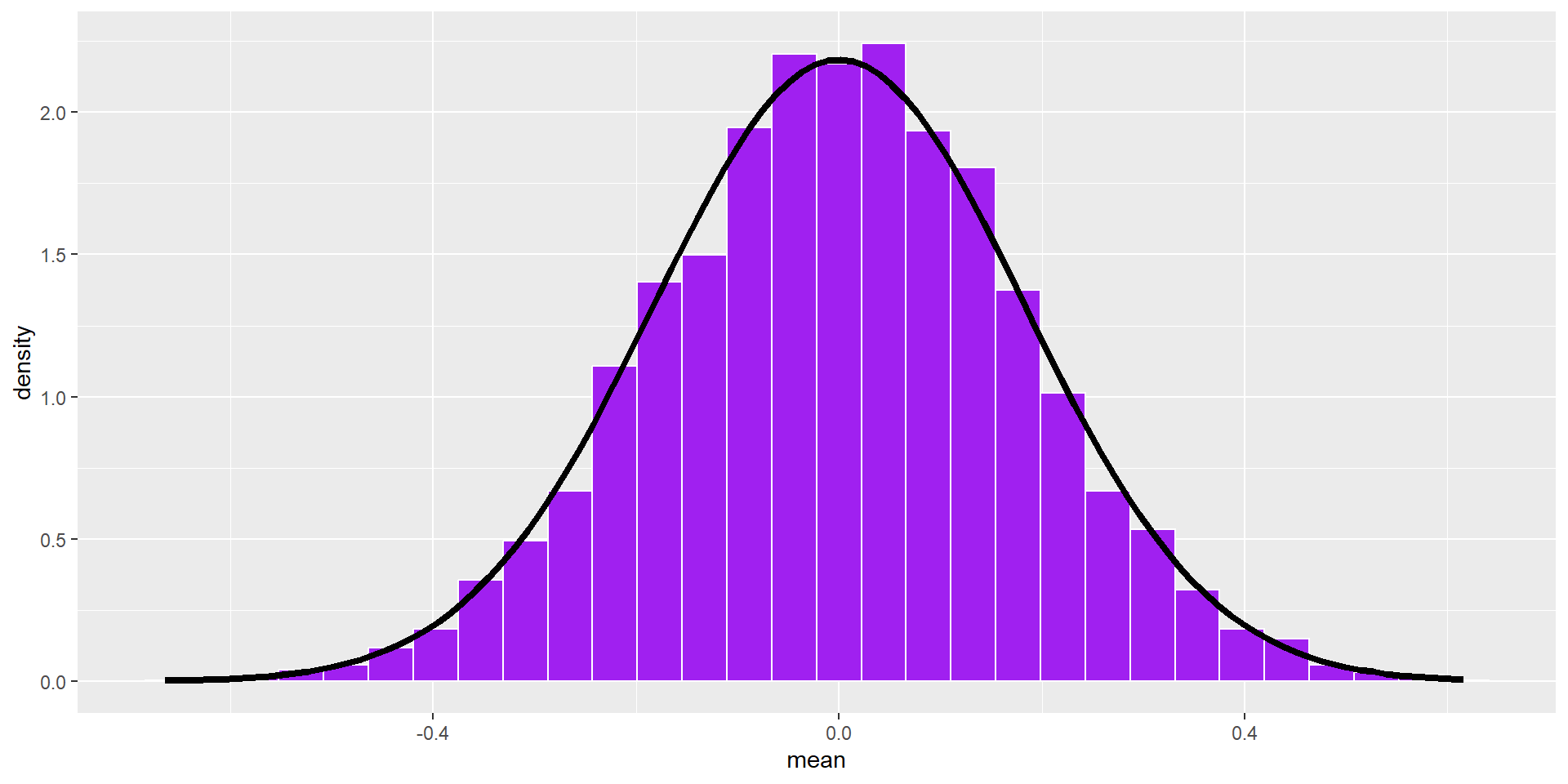

The statistics from a large number of samples also have a distribution: the sampling distribution.

By the Central Limit Theorem, this distribution will be normal as sample size increases.

Code

reps = 5000

means = rep(0, reps)

se = 1/sqrt(sample_size)

set.seed(101919)

for(i in 1:reps){

means[i] = mean(rnorm(n = sample_size))

}

data.frame(mean = means) %>%

ggplot(aes(x = mean)) +

geom_histogram(aes(y = ..density..),

fill = "purple",

color = "white") +

stat_function(fun = function(x) dnorm(x, mean = 0, sd = se), inherit.aes = F, size = 1.5)

This distribution has a standard deviation, called the standard error of the mean. Its mean converges on \(\mu\).

We don’t actually have to take a large number of random samples to construct the sampling distribution. It is a theoretical result of the Central Limit Theorem. We just need an estimate of the population parameter, \(s\), which we can get from the sample.

Code

reps = 5000

means = rep(0, reps)

se = 1/sqrt(sample_size)

set.seed(101919)

for(i in 1:reps){

means[i] = mean(rnorm(n = sample_size))

}

data.frame(mean = means) %>%

ggplot(aes(x = mean)) +

geom_histogram(aes(y = ..density..),

fill = "purple",

color = "white") +

stat_function(fun = function(x) dnorm(x, mean = 0, sd = se), inherit.aes = F, size = 1.5)

We don’t actually have to take a large number of random samples to construct the sampling distribution. It is a theoretical result of the Central Limit Theorem. We just need an estimate of the population parameter, \(s\), which we can get from the sample.

The sampling distribution of means can be used to make probabilistic statements about means in the same way that the standard normal distribution is used to make probabilistic statements about scores.

For example, we can determine the range within which the population mean is likely to be with a particular level of confidence.

Or, we can propose different values for the population mean and ask how typical or rare the sample mean would be if that population value were true. We can then compare the plausibility of different such “models” of the population.

The key is that we have a sampling distribution of the mean with a standard deviation (the Standard Error of the Mean) that is linked to the population:

\[SEM = \sigma_M = \frac{\sigma}{\sqrt{N}}\]

We do not know \(\sigma\) but we can estimate it based on the sample statistic:

\[\hat{\sigma} = s = \sqrt{\frac{1}{N-1}\sum_{i=1}^N(X-\bar{X})^2}\]

\[\hat{\sigma} = s = \sqrt{\frac{1}{N-1}\sum_{i=1}^N(X-\bar{X})^2}\]

This is the sample estimate of the population standard deviation. This is an unbiased estimate of \(\sigma\) and relies on the sample mean, which is an unbiased estimate of \(\mu\).

\[SEM = \sigma_M = \frac{\hat{\sigma}}{\sqrt{N}} = \frac{\text{Estimate of pop SD}}{\sqrt{N}}\]

The sampling distribution of the mean has variability, represented by the SEM, reflecting uncertainty in the sample mean as an estimate of the population mean.

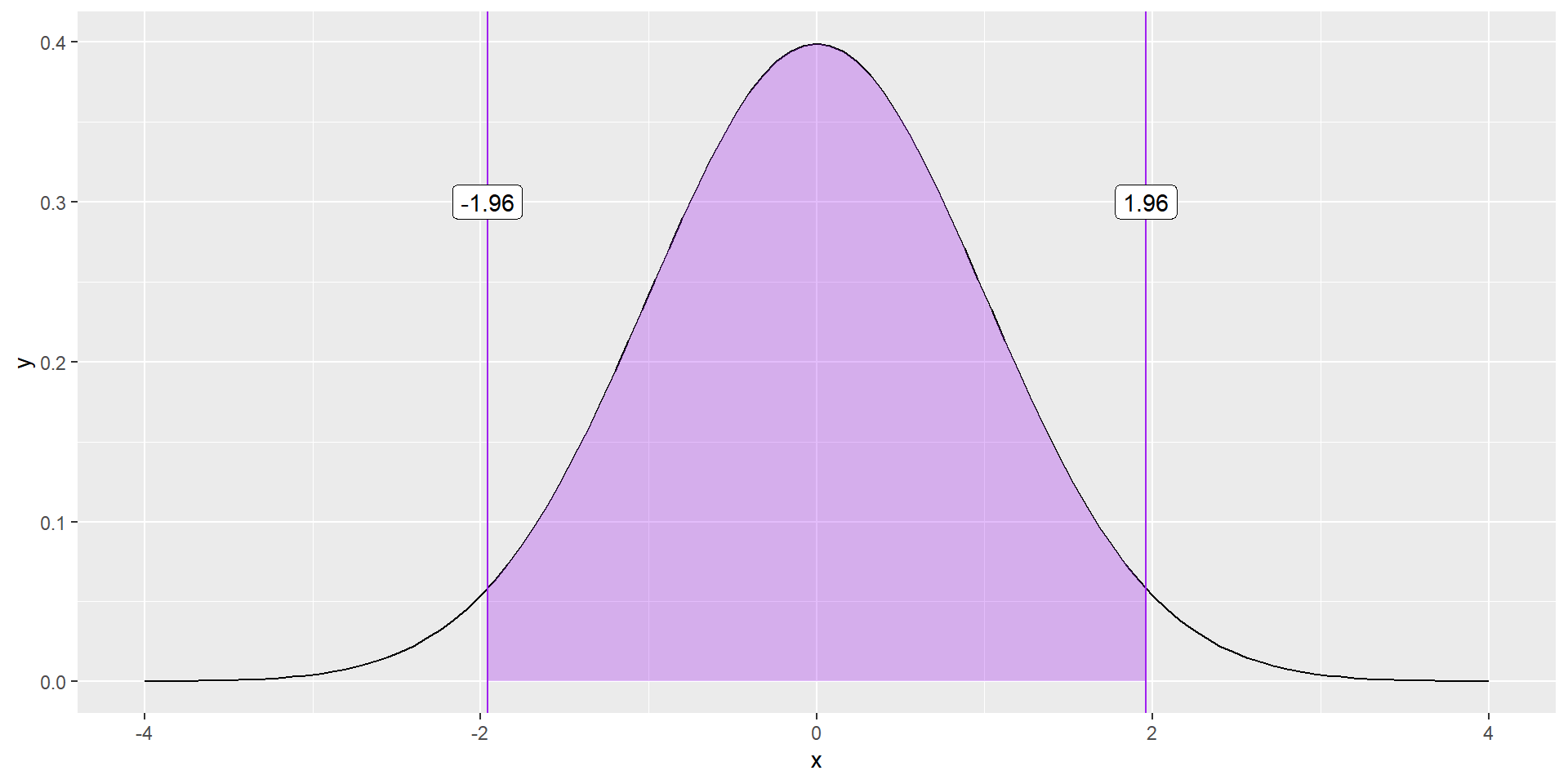

The assumption of normality allows us to construct an interval within which we have good reason to believe a sample mean will fall if it comes from a particular population:

\[\bar{X} - (1.96\times SEM) \leq \mu \leq \bar{X} + (1.96\times SEM) \]

\[\bar{X} - (1.96\times SEM) \leq \mu \leq \bar{X} + (1.96\times SEM) \]

This is referred to as a 95% confidence interval (CI). Note the assumption of normality, which should hold by the Central Limit Theorem, if N is sufficiently large.

The 95% CI is sometimes represented as:

\[CI_{95} = \bar{X} \pm [1.96\frac{\hat{\sigma}}{\sqrt{N}}]\]

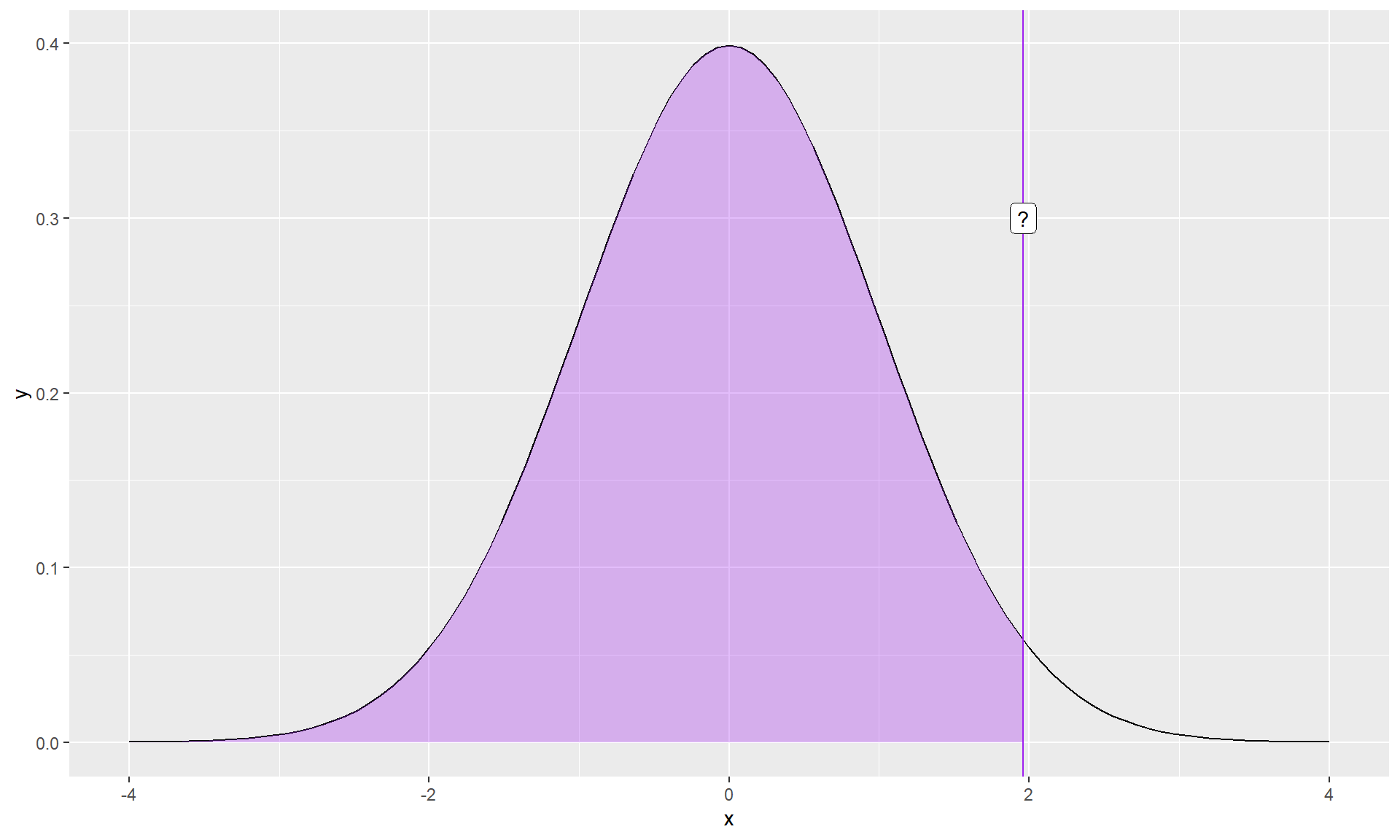

Where does 1.96 come from?

Remember the Empirical Rule (95%)

Code

ggplot(data.frame(x = seq(-4, 4)), aes(x)) +

stat_function(fun = function(x) dnorm(x)) +

stat_function(fun = function(x) dnorm(x),

xlim = c(-1.96, 1.96), geom = "area", fill = "purple", alpha = .3) +

geom_vline(aes(xintercept = 1.96), color = "purple")+

geom_vline(aes(xintercept = -1.96), color = "purple") +

geom_label(aes(x = 1.96, y = .3, label = "1.96"))+

geom_label(aes(x = -1.96, y = .3, label = "-1.96"))

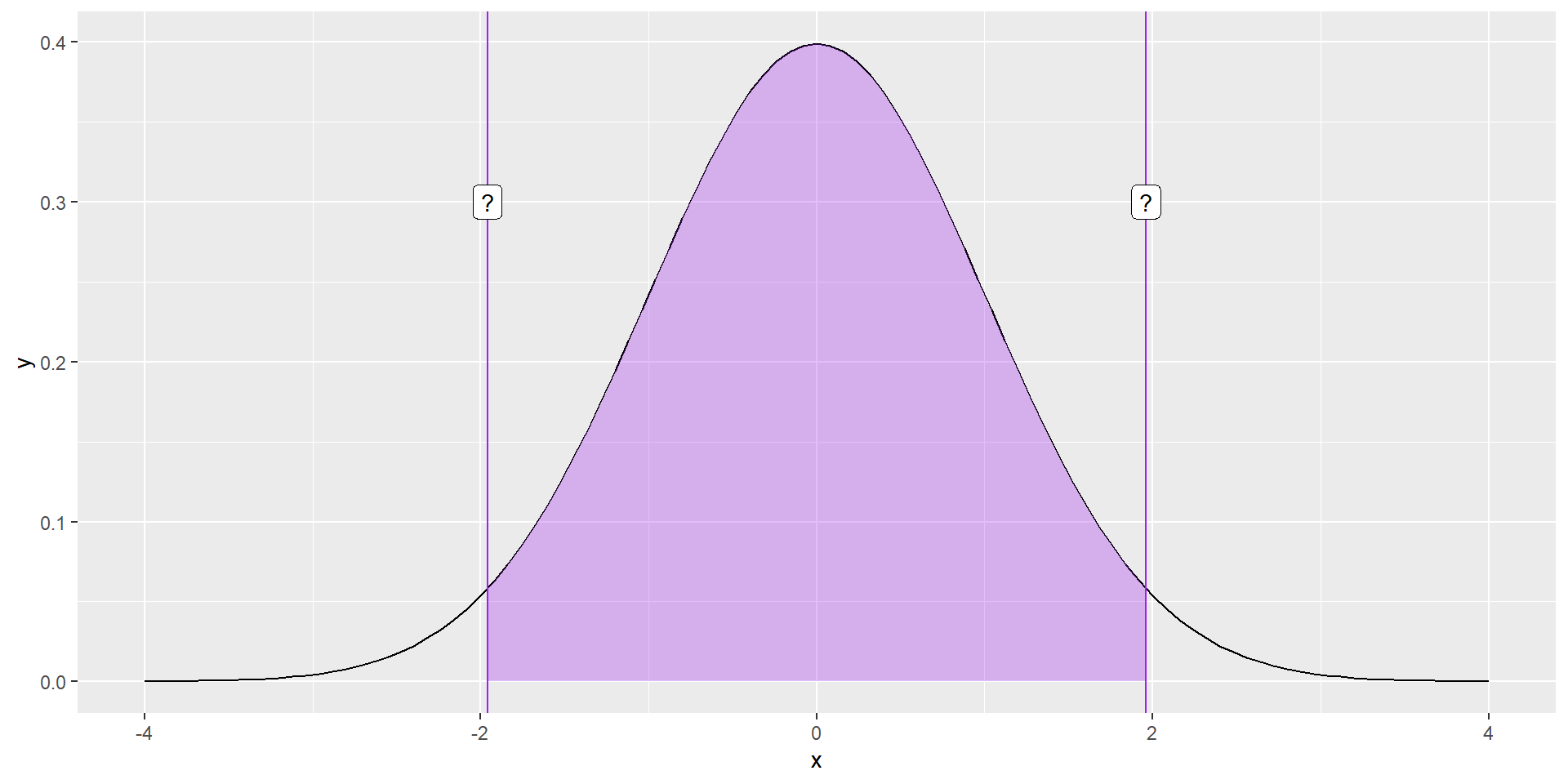

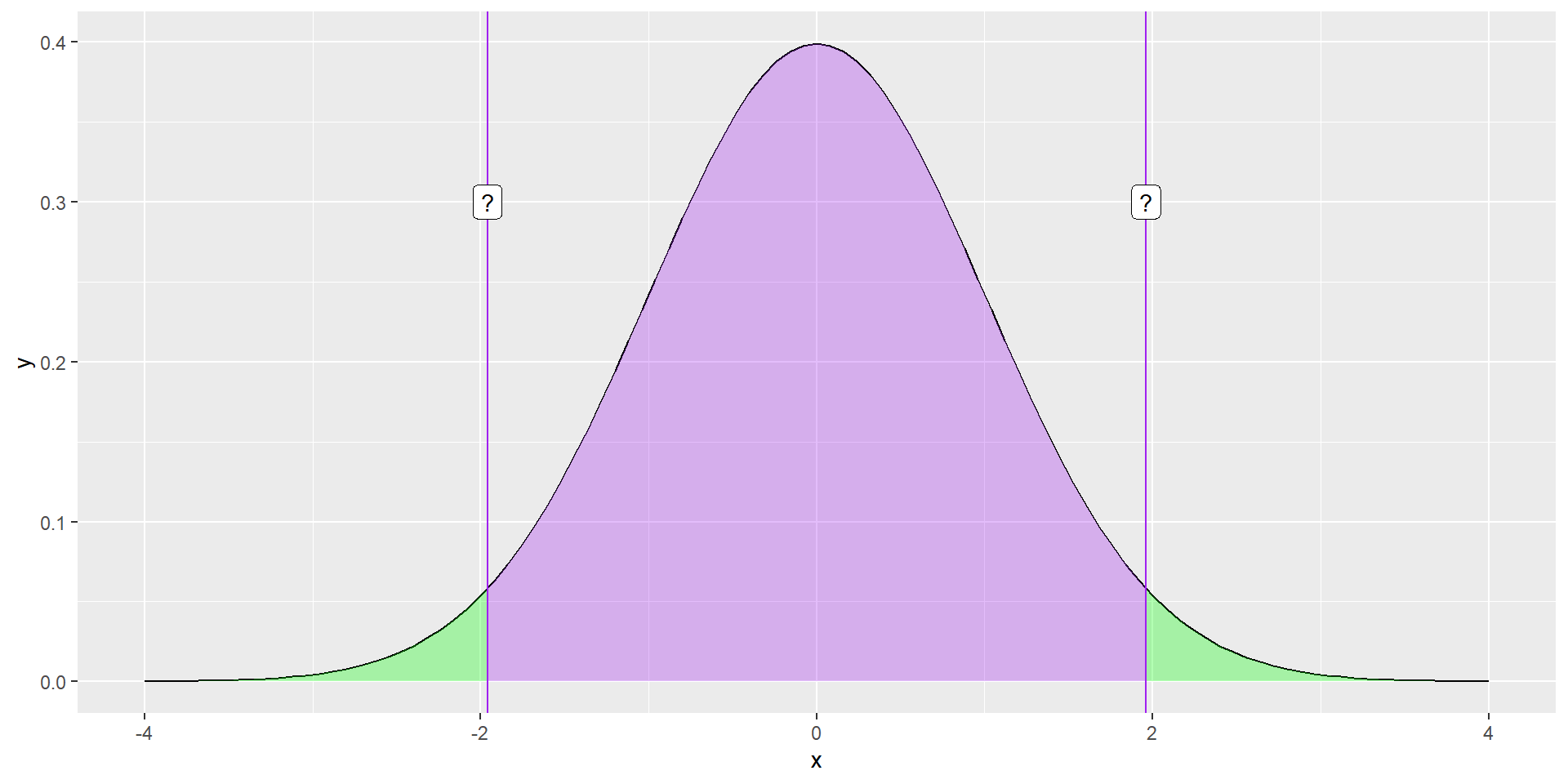

What if you didn’t know the value?

Code

ggplot(data.frame(x = seq(-4, 4)), aes(x)) +

stat_function(fun = function(x) dnorm(x)) +

stat_function(fun = function(x) dnorm(x),

xlim = c(-1.96, 1.96), geom = "area", fill = "purple", alpha = .3) +

geom_vline(aes(xintercept = 1.96), color = "purple")+

geom_vline(aes(xintercept = -1.96), color = "purple") +

geom_label(aes(x = 1.96, y = .3, label = "?"))+

geom_label(aes(x = -1.96, y = .3, label = "?"))

What if you didn’t know the value?

Code

ggplot(data.frame(x = seq(-4, 4)), aes(x)) +

stat_function(fun = function(x) dnorm(x)) +

stat_function(fun = function(x) dnorm(x),

xlim = c(-1.96, 1.96), geom = "area", fill = "purple", alpha = .3) +

stat_function(fun = function(x) dnorm(x),

xlim = c(-4, -1.96), geom = "area", fill = "green", alpha = .3) +

stat_function(fun = function(x) dnorm(x),

xlim = c(4, 1.96), geom = "area", fill = "green", alpha = .3) +

geom_vline(aes(xintercept = 1.96), color = "purple")+

geom_vline(aes(xintercept = -1.96), color = "purple") +

geom_label(aes(x = 1.96, y = .3, label = "?"))+

geom_label(aes(x = -1.96, y = .3, label = "?"))

What if you didn’t know the value?

Code

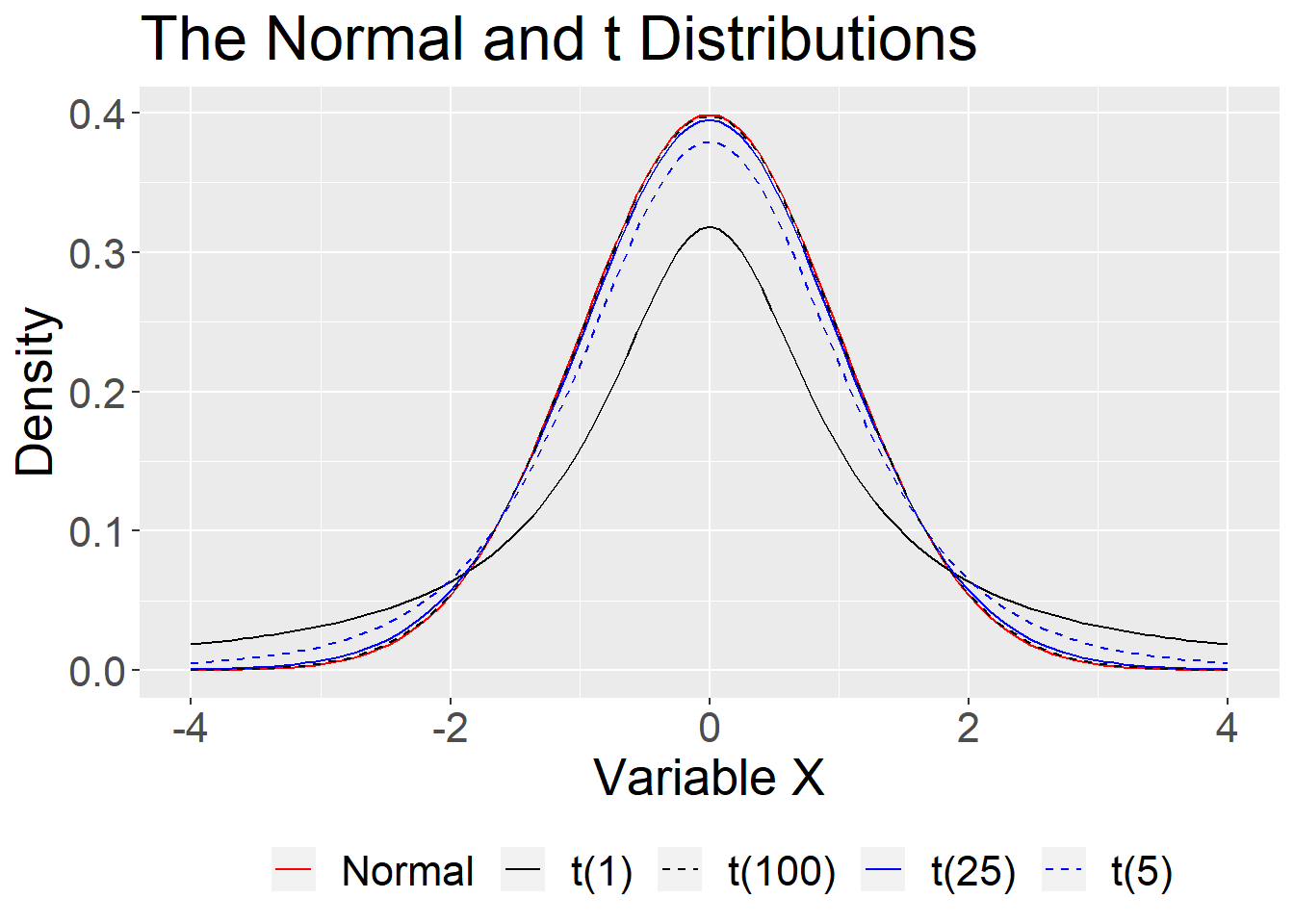

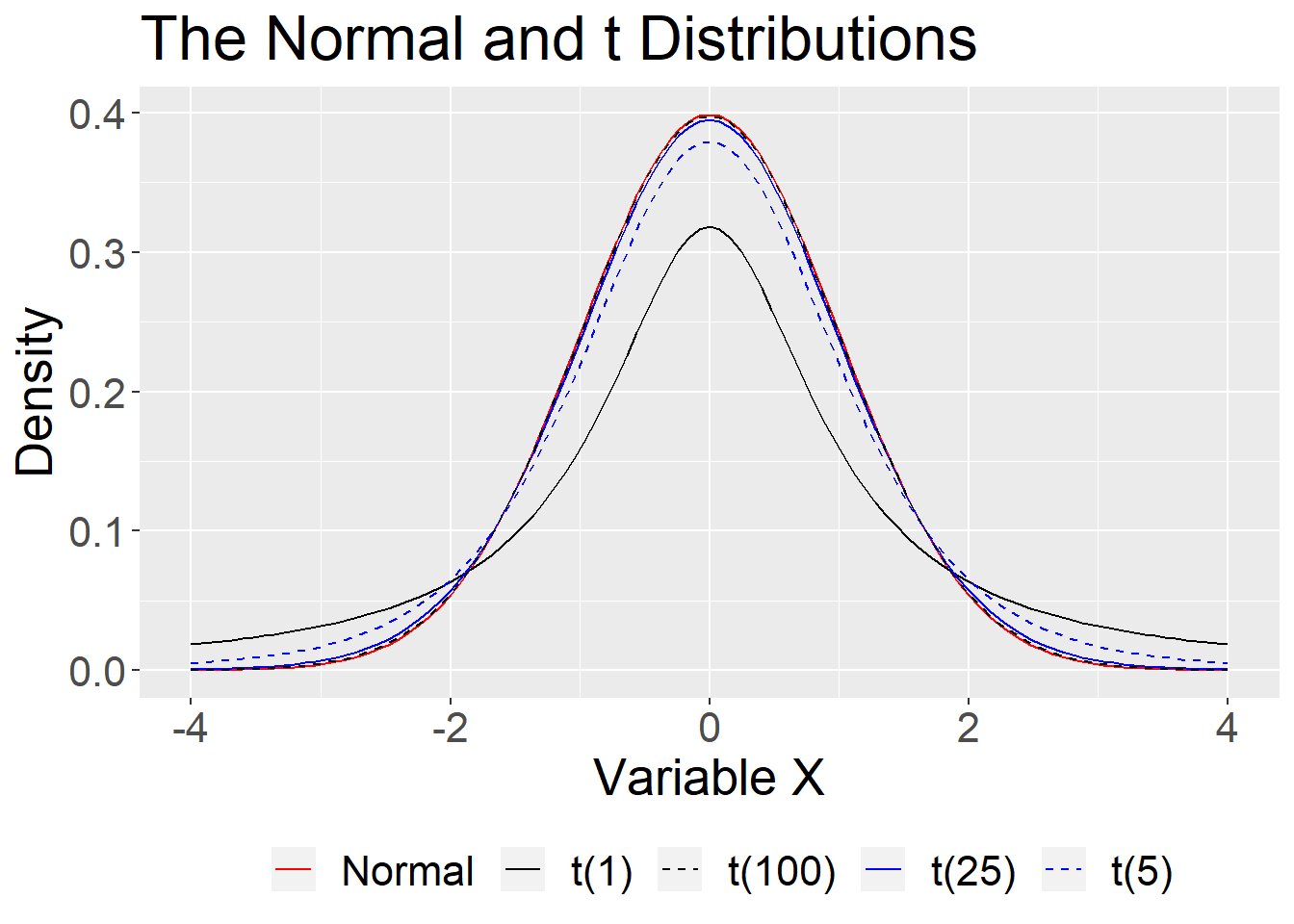

The normal distribution assumes we know the population mean and standard deviation. But we don’t. We only know the sample mean and standard deviation, and those have some uncertainty about them.

Code

ggplot(data.frame(x = seq(-4, 4)), aes(x)) +

stat_function(fun = function(x) dnorm(x),

aes(color = "Normal", linetype = "Normal")) +

stat_function(fun = function(x) dt(x, df = 1),

aes(color = "t(1)", linetype = "t(1)")) +

stat_function(fun = function(x) dt(x, df = 5),

aes(color = "t(5)", linetype = "t(5)")) +

stat_function(fun = function(x) dt(x, df = 25),

aes(color = "t(25)", linetype = "t(25)")) +

stat_function(fun = function(x) dt(x, df = 100),

aes(color = "t(100)", linetype = "t(100)")) +

scale_x_continuous("Variable X") +

scale_y_continuous("Density") +

scale_color_manual("",

values = c("red", "black", "black", "blue", "blue")) +

scale_linetype_manual("",

values = c("solid", "solid", "dashed", "solid", "dashed")) +

ggtitle("The Normal and t Distributions") +

theme(text = element_text(size=20),legend.position = "bottom")

That uncertainty is reduced with large samples, so that the normal is “close enough.” In small samples, the \(t\) distribution provides a better approximation.

Code

ggplot(data.frame(x = seq(-4, 4)), aes(x)) +

stat_function(fun = function(x) dnorm(x),

aes(color = "Normal", linetype = "Normal")) +

stat_function(fun = function(x) dt(x, df = 1),

aes(color = "t(1)", linetype = "t(1)")) +

stat_function(fun = function(x) dt(x, df = 5),

aes(color = "t(5)", linetype = "t(5)")) +

stat_function(fun = function(x) dt(x, df = 25),

aes(color = "t(25)", linetype = "t(25)")) +

stat_function(fun = function(x) dt(x, df = 100),

aes(color = "t(100)", linetype = "t(100)")) +

scale_x_continuous("Variable X") +

scale_y_continuous("Density") +

scale_color_manual("",

values = c("red", "black", "black", "blue", "blue")) +

scale_linetype_manual("",

values = c("solid", "solid", "dashed", "solid", "dashed")) +

ggtitle("The Normal and t Distributions") +

theme(text = element_text(size=20),legend.position = "bottom")

For small samples, we need to use the t distribution with its fatter tails. This produces wider confidence intervals—the penalty we have to pay for our ignorance about the population.

The form of the confidence interval remains the same. We simply substitute a corresponding value from the t distribution (using df =\(N -1\)).

\[CI_{95} = \bar{X} \pm [1.96\frac{\hat{\sigma}}{\sqrt{N}}]\]

\[CI_{95} = \bar{X} \pm [Z_{.975}\frac{\hat{\sigma}}{\sqrt{N}}]\]

\[CI_{95} = \bar{X} \pm [t_{.975, df = N-1}\frac{\hat{\sigma}}{\sqrt{N}}]\]

- The meaning of the confidence interval can be a bit confusing and arises from the peculiar language forced on us by the frequentist viewpoint.

- The CI DOES NOT mean “there is a 95% probability that the true mean lies inside the confidence interval.”

- It means that if we carried out random sampling from the population a large number of times, and calculated the 95% confidence interval each time, then 95% of those intervals can be expected to contain the population mean.

In previous years, incoming first year graduate students had an average coffee consumption of 7.6 cups per week and a standard deviation of 2.4.

The next incoming class will have 43 students. What range of exam means would be plausible if this class is similar to past classes (comes from the same population)?

\[\bar{X} - (1.96\times SEM) \leq \mu \leq \bar{X} + (1.96\times SEM) \]

Calculated with the normal distribution

M <- 7.6

SD <- 2.4

N <- 43

sem <- SD/sqrt(N)

ci_lb_z <- M - (sem * qnorm(p = .975))

ci_ub_z <- M + (sem * qnorm(p = .975))

c(ci_lb_z, ci_ub_z)[1] 6.88266 8.31734Calculated with the t-distribution

M <- 7.6

SD <- 2.4

N <- 43

sem <- SD/sqrt(N)

ci_lb_t <- M - (sem * qt(p = .975, df = N-1))

ci_ub_t <- M + (sem * qt(p = .975, df = N-1))

c(ci_lb_t, ci_ub_t)[1] 6.861389 8.338611What do you notice about the two?

Lab 2 Time

Using Rainbow Parentheses

Visual Editor & Knitting document